Hands-on walkthrough

Ambient Mode

kind: deploy Kubernetes (1.32.2) with one control-plane node and two worker nodes

#

kind create cluster --name myk8s --image kindest/node:v1.32.2 --config - <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000 # Sample Application
    hostPort: 30000
  - containerPort: 30001 # Prometheus
    hostPort: 30001
  - containerPort: 30002 # Grafana
    hostPort: 30002
  - containerPort: 30003 # Kiali
    hostPort: 30003
  - containerPort: 30004 # Tracing
    hostPort: 30004
  - containerPort: 30005 # kube-ops-view
    hostPort: 30005
- role: worker
- role: worker
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.200.1.0/24
EOF

# Verify the installation

docker ps

# Install basic tools on each node

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'apt update && apt install tree psmisc lsof ipset wget bridge-utils net-tools dnsutils tcpdump ngrep iputils-ping git vim -y'; echo; done

# for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'DEBIAN_FRONTEND=noninteractive apt install termshark -y'; echo; done

# (Optional) kube-ops-view

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set service.main.type=NodePort,service.main.ports.http.nodePort=30005 --set env.TZ="Asia/Seoul" --namespace kube-system

kubectl get deploy,pod,svc,ep -n kube-system -l app.kubernetes.io/instance=kube-ops-view

## Open the kube-ops-view URL

open "http://127.0.0.1:30005/#scale=1.5"

open "http://127.0.0.1:30005/#scale=1.3"

Deploy a test PC and web servers (both are actually containers) on the kind Docker network

# Installing kind creates a Docker bridge network named kind (172.18.0.0/16 in this environment)

docker network ls

docker inspect kind

# Start the test PC (mypc) container: attach it to the kind Docker bridge, with or without a fixed container IP

docker run -d --rm --name mypc --network kind --ip 172.18.0.100 nicolaka/netshoot sleep infinity # with a fixed IP

# If the fixed-IP run fails, start the container without specifying an IP instead

docker run -d --rm --name mypc --network kind nicolaka/netshoot sleep infinity # without a fixed IP

docker ps

# Start the web server (myweb1, myweb2) containers on the kind Docker bridge

# https://hub.docker.com/r/hashicorp/http-echo

# docker run -d --rm --name myweb1 --network kind --ip 172.18.0.101 hashicorp/http-echo -listen=:80 -text="myweb1 server"

# docker run -d --rm --name myweb2 --network kind --ip 172.18.0.102 hashicorp/http-echo -listen=:80 -text="myweb2 server"

# If the fixed-IP run fails, start the containers without specifying an IP instead

# docker run -d --rm --name myweb1 --network kind hashicorp/http-echo -listen=:80 -text="myweb1 server"

# docker run -d --rm --name myweb2 --network kind hashicorp/http-echo -listen=:80 -text="myweb2 server"

# docker ps

# Check the IP addresses of the containers (nodes) on the kind network

docker ps -q | xargs docker inspect --format '{{.Name}} {{.NetworkSettings.Networks.kind.IPAddress}}'

/myweb2 172.18.0.102

/myweb1 172.18.0.101

/mypc 172.18.0.100

/myk8s-control-plane 172.18.0.2

# Containers on the same Docker network (kind) can reach each other by container name

# docker exec -it mypc curl myweb1

# docker exec -it mypc curl myweb2

#

# docker exec -it mypc curl 172.18.0.101

# docker exec -it mypc curl 172.18.0.102

Deploy MetalLB - GitHub

# Deploy MetalLB

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.14.9/config/manifests/metallb-native.yaml

# Verify

kubectl get crd

kubectl get pod -n metallb-system

# Configure the IPAddressPool and L2Advertisement

cat << EOF | kubectl apply -f -
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default
  namespace: metallb-system
spec:
  addresses:
  - 172.18.255.201-172.18.255.220
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default
  namespace: metallb-system
spec:
  ipAddressPools:
  - default
EOF

# Verify

kubectl get IPAddressPool,L2Advertisement -A
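The pool above hands out addresses from 172.18.255.201 to 172.18.255.220. As a minimal sketch (the helper names `ip_to_int` and `ip_in_range` are hypothetical and not part of MetalLB or kubectl), the following POSIX shell functions check whether a given address falls inside that range, which can be handy when confirming that a LoadBalancer Service received an address from this pool.

```shell
# Convert a dotted-quad IPv4 address to an integer (hypothetical helper).
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 when address $1 lies in the inclusive range $2..$3.
ip_in_range() {
  ip=$(ip_to_int "$1")
  lo=$(ip_to_int "$2")
  hi=$(ip_to_int "$3")
  [ "$ip" -ge "$lo" ] && [ "$ip" -le "$hi" ]
}

# Example: the first address of the pool configured above.
if ip_in_range 172.18.255.201 172.18.255.201 172.18.255.220; then
  echo "inside the MetalLB pool"
else
  echo "outside the MetalLB pool"
fi
```

In a live cluster the first argument would typically come from `kubectl get svc <name> -o jsonpath='{.status.loadBalancer.ingress[0].ip}'`.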

Install Istio 1.26.0 with the ambient profile - Getting Started, install

# Enter myk8s-control-plane and run the installation from there

docker exec -it myk8s-control-plane bash

-----------------------------------

# Install istioctl

export ISTIOV=1.26.0

echo 'export ISTIOV=1.26.0' >> /root/.bashrc

curl -s -L https://istio.io/downloadIstio | ISTIO_VERSION=$ISTIOV sh -

cp istio-$ISTIOV/bin/istioctl /usr/local/bin/istioctl

istioctl version --remote=false

client version: 1.26.0

# Deploy the control plane with the ambient profile

istioctl install --set profile=ambient --set meshConfig.accessLogFile=/dev/stdout --skip-confirmation

# istioctl install --set profile=ambient --set meshConfig.enableTracing=true -y

# Install the Kubernetes Gateway API CRDs

kubectl get crd gateways.gateway.networking.k8s.io &> /dev/null || \
  kubectl apply -f https://github.com/kubernetes-sigs/gateway-api/releases/download/v1.3.0/standard-install.yaml

# Install the add-ons

kubectl apply -f istio-$ISTIOV/samples/addons

kubectl apply -f istio-$ISTIOV/samples/addons # run once more if a nodePort conflict occurs

# Exit the container

exit

-----------------------------------

# Verify the installation: istiod, istio-cni-node, ztunnel, CRDs, and so on (the ambient profile does not deploy istio-ingressgateway)

kubectl get all,svc,ep,sa,cm,secret,pdb -n istio-system

kubectl get crd | grep istio.io

kubectl get crd | grep -v istio | grep -v metallb

kubectl get crd | grep gateways

gateways.gateway.networking.k8s.io 2025-06-01T04:54:23Z

gateways.networking.istio.io 2025-06-01T04:53:51Z

kubectl api-resources | grep Gateway

gatewayclasses gc gateway.networking.k8s.io/v1 false GatewayClass

gateways gtw gateway.networking.k8s.io/v1 true Gateway

gateways gw networking.istio.io/v1 true Gateway

kubectl describe cm -n istio-system istio

...

Data

====

mesh:

----

accessLogFile: /dev/stdout

defaultConfig:

discoveryAddress: istiod.istio-system.svc:15012

defaultProviders:

metrics:

- prometheus

enablePrometheusMerge: true

...

docker exec -it myk8s-control-plane istioctl proxy-status

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

ztunnel-25hpt.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-x4787 1.26.0

ztunnel-4r4d4.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-x4787 1.26.0

ztunnel-9rzzt.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-x4787 1.26.0

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

docker exec -it myk8s-control-plane istioctl ztunnel-config service

# Check the iptables rules

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'iptables-save'; echo; done

# Switch the Services to NodePort and assign nodePorts 30001-30004: prometheus(30001), grafana(30002), kiali(30003), tracing(30004)

kubectl patch svc -n istio-system prometheus -p '{"spec": {"type": "NodePort", "ports": [{"port": 9090, "targetPort": 9090, "nodePort": 30001}]}}'

kubectl patch svc -n istio-system grafana -p '{"spec": {"type": "NodePort", "ports": [{"port": 3000, "targetPort": 3000, "nodePort": 30002}]}}'

kubectl patch svc -n istio-system kiali -p '{"spec": {"type": "NodePort", "ports": [{"port": 20001, "targetPort": 20001, "nodePort": 30003}]}}'

kubectl patch svc -n istio-system tracing -p '{"spec": {"type": "NodePort", "ports": [{"port": 80, "targetPort": 16686, "nodePort": 30004}]}}'

# Open Prometheus: check the Envoy and Istio metrics

open http://127.0.0.1:30001

# Open Grafana

open http://127.0.0.1:30002

# Open Kiali (NodePort)

open http://127.0.0.1:30003

# Open tracing: the Jaeger tracing dashboard

open http://127.0.0.1:30004

Inspect the istio-cni-node and ztunnel DaemonSet pods

istio-cni-node

#

kubectl get ds -n istio-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

istio-cni-node 3 3 3 3 3 kubernetes.io/os=linux 30m

ztunnel 3 3 3 3 3 kubernetes.io/os=linux 29m

#

kubectl get pod -n istio-system -l k8s-app=istio-cni-node -owide

kubectl describe pod -n istio-system -l k8s-app=istio-cni-node

...

Containers:

install-cni:

Container ID: containerd://3be28564d6098b727125c3c493197baf87fa215d4c2847f36fe6075d2daad24a

Image: docker.io/istio/install-cni:1.26.0-distroless

Image ID: docker.io/istio/install-cni@sha256:e69cea606f6fe75907602349081f78ddb0a94417199f9022f7323510abef65cb

Port: 15014/TCP

Host Port: 0/TCP

Command:

install-cni

Args:

--log_output_level=info

State: Running

Started: Sun, 01 Jun 2025 13:54:07 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 100m

memory: 100Mi

Readiness: http-get http://:8000/readyz delay=0s timeout=1s period=10s #success=1 #failure=3

Environment Variables from:

istio-cni-config ConfigMap Optional: false

Environment:

REPAIR_NODE_NAME: (v1:spec.nodeName)

REPAIR_RUN_AS_DAEMON: true

REPAIR_SIDECAR_ANNOTATION: sidecar.istio.io/status

ALLOW_SWITCH_TO_HOST_NS: true

NODE_NAME: (v1:spec.nodeName)

GOMEMLIMIT: node allocatable (limits.memory)

GOMAXPROCS: node allocatable (limits.cpu)

POD_NAME: istio-cni-node-xj4x2 (v1:metadata.name)

POD_NAMESPACE: istio-system (v1:metadata.namespace)

Mounts:

/host/etc/cni/net.d from cni-net-dir (rw)

/host/opt/cni/bin from cni-bin-dir (rw)

/host/proc from cni-host-procfs (ro)

/host/var/run/netns from cni-netns-dir (rw)

/var/run/istio-cni from cni-socket-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-srxzl (ro)

/var/run/ztunnel from cni-ztunnel-sock-dir (rw)

...

Volumes:

cni-bin-dir:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni-host-procfs:

Type: HostPath (bare host directory volume)

Path: /proc

HostPathType: Directory

cni-ztunnel-sock-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/ztunnel

HostPathType: DirectoryOrCreate

cni-net-dir:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

cni-socket-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/istio-cni

HostPathType:

cni-netns-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/netns

HostPathType: DirectoryOrCreate

...

# Check basic information on the nodes

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /opt/cni/bin'; echo; done

-rwxr-xr-x 1 root root 52428984 Jun 1 05:42 istio-cni

...

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /etc/cni/net.d'; echo; done

-rw-r--r-- 1 root root 862 Jun 1 04:54 10-kindnet.conflist

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /var/run/istio-cni'; echo; done

-rw------- 1 root root 2990 Jun 1 05:42 istio-cni-kubeconfig

-rw------- 1 root root 171 Jun 1 04:54 istio-cni.log

srw-rw-rw- 1 root root 0 Jun 1 04:54 log.sock

srw-rw-rw- 1 root root 0 Jun 1 04:54 pluginevent.sock

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /var/run/netns'; echo; done

...

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'lsns -t net'; echo; done

# Check the istio-cni-node DaemonSet pod logs

kubectl logs -n istio-system -l k8s-app=istio-cni-node -f

...

ztunnel

# Check the ztunnel pods and store their names in variables

kubectl get pod -n istio-system -l app=ztunnel -owide

kubectl get pod -n istio-system -l app=ztunnel

ZPOD1NAME=$(kubectl get pod -n istio-system -l app=ztunnel -o jsonpath="{.items[0].metadata.name}")

ZPOD2NAME=$(kubectl get pod -n istio-system -l app=ztunnel -o jsonpath="{.items[1].metadata.name}")

ZPOD3NAME=$(kubectl get pod -n istio-system -l app=ztunnel -o jsonpath="{.items[2].metadata.name}")

echo $ZPOD1NAME $ZPOD2NAME $ZPOD3NAME

#

kubectl describe pod -n istio-system -l app=ztunnel

...

Containers:

istio-proxy:

Container ID: containerd://d81ca867bfd0c505f062ea181a070a8ab313df3591e599a22706a7f4f537ffc5

Image: docker.io/istio/ztunnel:1.26.0-distroless

Image ID: docker.io/istio/ztunnel@sha256:d711b5891822f4061c0849b886b4786f96b1728055333cbe42a99d0aeff36dbe

Port: 15020/TCP

...

Requests:

cpu: 200m

memory: 512Mi

Readiness: http-get http://:15021/healthz/ready delay=0s timeout=1s period=10s #success=1 #failure=3

Environment:

CA_ADDRESS: istiod.istio-system.svc:15012

XDS_ADDRESS: istiod.istio-system.svc:15012

RUST_LOG: info

RUST_BACKTRACE: 1

ISTIO_META_CLUSTER_ID: Kubernetes

INPOD_ENABLED: true

TERMINATION_GRACE_PERIOD_SECONDS: 30

POD_NAME: ztunnel-9rzzt (v1:metadata.name)

POD_NAMESPACE: istio-system (v1:metadata.namespace)

NODE_NAME: (v1:spec.nodeName)

INSTANCE_IP: (v1:status.podIP)

SERVICE_ACCOUNT: (v1:spec.serviceAccountName)

ISTIO_META_ENABLE_HBONE: true

Mounts:

/tmp from tmp (rw)

/var/run/secrets/istio from istiod-ca-cert (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-42r88 (ro)

/var/run/secrets/tokens from istio-token (rw)

/var/run/ztunnel from cni-ztunnel-sock-dir (rw)

...

Volumes:

istio-token:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 43200

istiod-ca-cert:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: istio-ca-root-cert

Optional: false

cni-ztunnel-sock-dir:

Type: HostPath (bare host directory volume)

Path: /var/run/ztunnel

HostPathType: DirectoryOrCreate

...

#

kubectl krew install pexec

kubectl pexec $ZPOD1NAME -it -T -n istio-system -- bash

-------------------------------------------------------

whoami

ip -c addr

ifconfig

iptables -t mangle -S

iptables -t nat -S

ss -tnlp

ss -tnp

ss -xnp

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

u_str ESTAB 0 0 * 44981 * 44982 users:(("ztunnel",pid=1,fd=13),("ztunnel",pid=1,fd=8),("ztunnel",pid=1,fd=6))

u_seq ESTAB 0 0 /var/run/ztunnel/ztunnel.sock 47646 * 46988

u_str ESTAB 0 0 * 44982 * 44981 users:(("ztunnel",pid=1,fd=7))

u_seq ESTAB 0 0 * 46988 * 47646 users:(("ztunnel",pid=1,fd=19))

ls -l /var/run/ztunnel

total 0

srwxr-xr-x 1 root root 0 Jun 1 04:54 ztunnel.sock

# Check the metrics

curl -s http://localhost:15020/metrics

# Viewing Istiod state for ztunnel xDS resources

curl -s http://localhost:15000/config_dump

exit

-------------------------------------------------------

# Inspect the other ztunnel pods as well

kubectl pexec $ZPOD2NAME -it -T -n istio-system -- bash

kubectl pexec $ZPOD3NAME -it -T -n istio-system -- bash

# Check basic information on the nodes

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node sh -c 'ls -l /var/run/ztunnel'; echo; done

# Check the ztunnel DaemonSet pod logs

kubectl logs -n istio-system -l app=ztunnel -f

...

Deploy the sample application - Docs

#

docker exec -it myk8s-control-plane ls -l istio-1.26.0

total 40

-rw-r--r-- 1 root root 11357 May 7 11:05 LICENSE

-rw-r--r-- 1 root root 6927 May 7 11:05 README.md

drwxr-x--- 2 root root 4096 May 7 11:05 bin

-rw-r----- 1 root root 983 May 7 11:05 manifest.yaml

drwxr-xr-x 4 root root 4096 May 7 11:05 manifests

drwxr-xr-x 27 root root 4096 May 7 11:05 samples

drwxr-xr-x 3 root root 4096 May 7 11:05 tools

# Deploy the Bookinfo sample application:

docker exec -it myk8s-control-plane kubectl apply -f istio-1.26.0/samples/bookinfo/platform/kube/bookinfo.yaml

# Verify

kubectl get deploy,pod,svc,ep

docker exec -it myk8s-control-plane istioctl ztunnel-config service

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

docker exec -it myk8s-control-plane istioctl proxy-status

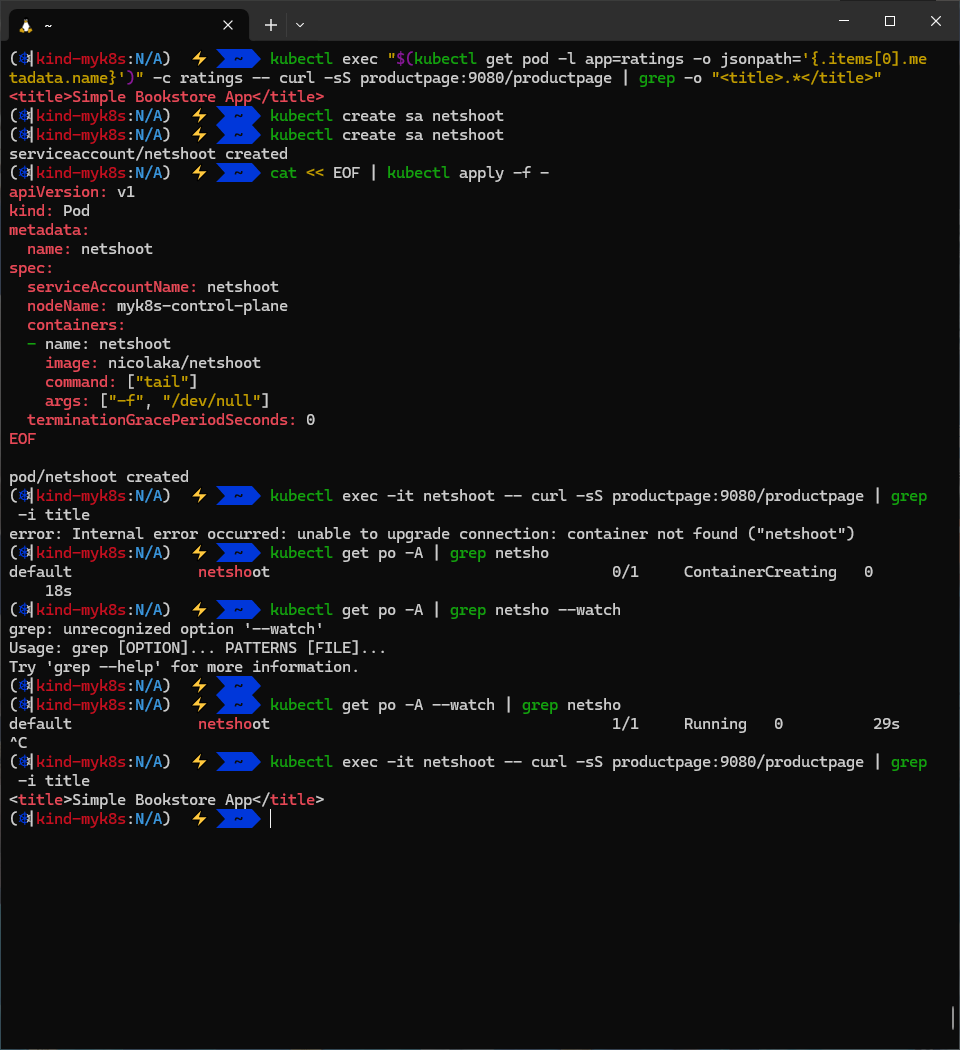

# Check connectivity: request the productpage page from the ratings pod

kubectl exec "$(kubectl get pod -l app=ratings -o jsonpath='{.items[0].metadata.name}')" -c ratings -- curl -sS productpage:9080/productpage | grep -o "<title>.*</title>"

# Create a pod for sending test requests: netshoot

kubectl create sa netshoot

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: netshoot
spec:
  serviceAccountName: netshoot
  nodeName: myk8s-control-plane
  containers:
  - name: netshoot
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# Send a test request

kubectl exec -it netshoot -- curl -sS productpage:9080/productpage | grep -i title

# Repeat the request

while true; do kubectl exec -it netshoot -- curl -sS productpage:9080/productpage | grep -i title ; date "+%Y-%m-%d %H:%M:%S"; sleep 1; done

Open the application to outside traffic

#

docker exec -it myk8s-control-plane cat istio-1.26.0/samples/bookinfo/gateway-api/bookinfo-gateway.yaml

apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: bookinfo-gateway
spec:
  gatewayClassName: istio
  listeners:
  - name: http
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: Same
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: bookinfo
spec:
  parentRefs:
  - name: bookinfo-gateway
  rules:
  - matches:
    - path:
        type: Exact
        value: /productpage
    - path:
        type: PathPrefix
        value: /static
    - path:
        type: Exact
        value: /login
    - path:
        type: Exact
        value: /logout
    - path:
        type: PathPrefix
        value: /api/v1/products
    backendRefs:
    - name: productpage
      port: 9080

docker exec -it myk8s-control-plane kubectl apply -f istio-1.26.0/samples/bookinfo/gateway-api/bookinfo-gateway.yaml

# Verify

kubectl get gateway

NAME CLASS ADDRESS PROGRAMMED AGE

bookinfo-gateway istio 172.18.255.201 True 75s

kubectl get HTTPRoute

NAME HOSTNAMES AGE

bookinfo 101s

kubectl get svc,ep bookinfo-gateway-istio

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/bookinfo-gateway-istio LoadBalancer 10.200.1.122 172.18.255.201 15021:30870/TCP,80:31570/TCP 2m37s

NAME ENDPOINTS AGE

endpoints/bookinfo-gateway-istio 10.10.1.6:15021,10.10.1.6:80 2m37s

kubectl get pod -l gateway.istio.io/managed=istio.io-gateway-controller -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

bookinfo-gateway-istio-6cbd9bcd49-fwqqp 1/1 Running 0 3m45s 10.10.1.6 myk8s-worker2 <none> <none>

# Check access

docker ps

kubectl get svc bookinfo-gateway-istio -o jsonpath='{.status.loadBalancer.ingress[0].ip}'

GWLB=$(kubectl get svc bookinfo-gateway-istio -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

docker exec -it mypc curl $GWLB/productpage -v

docker exec -it mypc curl $GWLB/productpage -I

# Repeated requests: keep the loop below running from the mypc container

GWLB=$(kubectl get svc bookinfo-gateway-istio -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

while true; do docker exec -it mypc curl $GWLB/productpage | grep -i title ; date "+%Y-%m-%d %H:%M:%S"; sleep 1; done

# Try accessing from your local PC

kubectl patch svc bookinfo-gateway-istio -p '{"spec": {"type": "LoadBalancer", "ports": [{"port": 80, "targetPort": 80, "nodePort": 30000}]}}'

kubectl get svc bookinfo-gateway-istio

open "http://127.0.0.1:30000/productpage"

# Repeat the request

while true; do curl -s http://127.0.0.1:30000/productpage | grep -i title ; date "+%Y-%m-%d %H:%M:%S"; sleep 1; done

Adding your application to ambient - Docs

- The namespace or pod has the label istio.io/dataplane-mode=ambient

- The pod does not have the opt-out label istio.io/dataplane-mode=none
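The two conditions above can be read as a small decision rule. The sketch below is only an illustration of that rule (the function name `is_ambient` is hypothetical; it is not an istioctl or kubectl command) and takes the `istio.io/dataplane-mode` label value of the namespace and of the pod, with an empty string meaning the label is not set.

```shell
# Decide whether a pod is captured by ambient mode, following the two rules above.
# $1: namespace value of istio.io/dataplane-mode ("" if unset)
# $2: pod value of istio.io/dataplane-mode ("" if unset)
is_ambient() {
  ns_mode=$1
  pod_mode=$2
  # The opt-out label on the pod always wins.
  if [ "$pod_mode" = "none" ]; then
    return 1
  fi
  # Otherwise either the namespace or the pod must opt in.
  [ "$ns_mode" = "ambient" ] || [ "$pod_mode" = "ambient" ]
}

# Example: namespace labeled ambient, pod opted out with dataplane-mode=none.
if is_ambient ambient none; then
  echo "captured by ambient"
else
  echo "not captured"
fi
```

The example corresponds to a pod in a labeled namespace that carries the opt-out label, so it reports that the pod is not captured.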

# Enable ambient mesh traffic for all pods in the default namespace

# You can enable all pods in a given namespace to be part of the ambient mesh by simply labeling the namespace:

kubectl label namespace default istio.io/dataplane-mode=ambient

# Check the pods: there is no sidecar, and the pod lifecycle is unaffected -> mTLS-encrypted traffic and L4 telemetry (metrics) are provided

docker exec -it myk8s-control-plane istioctl proxy-status

kubectl get pod

#

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

NAMESPACE POD NAME ADDRESS NODE WAYPOINT PROTOCOL

default details-v1-766844796b-nfsh8 10.10.2.16 myk8s-worker None HBONE

default netshoot 10.10.0.7 myk8s-control-plane None HBONE

default productpage-v1-54bb874995-xkq54 10.10.2.20 myk8s-worker None HBONE

...

docker exec -it myk8s-control-plane istioctl ztunnel-config workload --address 10.10.2.20

NAMESPACE POD NAME ADDRESS NODE WAYPOINT PROTOCOL

default productpage-v1-54bb874995-xkq54 10.10.2.20 myk8s-worker None HBONE

docker exec -it myk8s-control-plane istioctl ztunnel-config workload --address 10.10.2.20 -o json

[

{

"uid": "Kubernetes//Pod/default/productpage-v1-54bb874995-xkq54",

"workloadIps": [

"10.10.2.20"

],

"protocol": "HBONE",

"name": "productpage-v1-54bb874995-xkq54",

"namespace": "default",

"serviceAccount": "bookinfo-productpage",

"workloadName": "productpage-v1",

"workloadType": "pod",

"canonicalName": "productpage",

"canonicalRevision": "v1",

"clusterId": "Kubernetes",

"trustDomain": "cluster.local",

"locality": {},

"node": "myk8s-worker",

"status": "Healthy",

...

#

PPOD=$(kubectl get pod -l app=productpage -o jsonpath='{.items[0].metadata.name}')

kubectl pexec $PPOD -it -T -- bash

-------------------------------------------------------

iptables-save

iptables -t mangle -S

iptables -t nat -S

ss -tnlp

ss -tnp

ss -xnp

ls -l /var/run/ztunnel

# Check the metrics

curl -s http://localhost:15020/metrics | grep '^[^#]'

...

# Viewing Istiod state for ztunnel xDS resources

curl -s http://localhost:15000/config_dump

exit

-------------------------------------------------------

# Check ipset on the nodes: pod IPs are managed as members of the set

for node in control-plane worker worker2; do echo "node : myk8s-$node" ; docker exec -it myk8s-$node ipset list; echo; done

...

Members:

10.10.2.15 comment "57009539-36bb-42e0-bdac-4c2356fabbd3"

10.10.2.19 comment "64f46320-b85d-4e10-ab97-718d4e282116"

10.10.2.17 comment "b5ff9b4c-722f-48ef-a789-a95485fe9fa8"

10.10.2.18 comment "c6b368f7-6b6f-4d1d-8dbe-4ee85d7c9c22"

10.10.2.16 comment "cd1b1016-5570-4492-ba3a-4299790029d9"

10.10.2.20 comment "2b97238b-3b37-4a13-87a2-70755cb225e6"

# Check the istio-cni-node logs

kubectl -n istio-system logs -l k8s-app=istio-cni-node -f

...

# Monitor the ztunnel pod logs: inbound/outbound traffic

kubectl -n istio-system logs -l app=ztunnel -f | grep -E "inbound|outbound"

2025-06-01T06:37:49.162266Z info access connection complete src.addr=10.10.2.20:48392 src.workload="productpage-v1-54bb874995-xkq54" src.namespace="default" src.identity="spiffe://cluster.local/ns/default/sa/bookinfo-productpage" dst.addr=10.10.2.18:15008 dst.hbone_addr=10.10.2.18:9080 dst.service="reviews.default.svc.cluster.local" dst.workload="reviews-v2-556d6457d-4r8n8" dst.namespace="default" dst.identity="spiffe://cluster.local/ns/default/sa/bookinfo-reviews" direction="inbound" bytes_sent=602 bytes_recv=192 duration="31ms"

2025-06-01T06:37:49.162347Z info access connection complete src.addr=10.10.2.20:53412 src.workload="productpage-v1-54bb874995-xkq54" src.namespace="default" src.identity="spiffe://cluster.local/ns/default/sa/bookinfo-productpage" dst.addr=10.10.2.18:15008 dst.hbone_addr=10.10.2.18:9080 dst.service="reviews.default.svc.cluster.local" dst.workload="reviews-v2-556d6457d-4r8n8" dst.namespace="default" dst.identity="spiffe://cluster.local/ns/default/sa/bookinfo-reviews" direction="outbound" bytes_sent=192 bytes_recv=602 duration="31ms"
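Each access log line is a flat list of `key=value` pairs, so individual fields are easy to pull out with standard tools. The sketch below is one possible way to do that; `log_field` is a hypothetical helper, and the sample line is an abbreviated copy of the first log line above, with some fields left out for brevity.

```shell
# Print the value of one key from a ztunnel access log line read on stdin.
# Handles both quoted (key="value") and bare (key=value) forms.
log_field() {
  # Escape dots so that a key such as dst.addr is matched literally.
  key=$(printf '%s' "$1" | sed 's/\./\\./g')
  sed -n "s/.* ${key}=\"\{0,1\}\([^\" ]*\)\"\{0,1\}.*/\1/p"
}

# Abbreviated sample taken from the log output above.
line='2025-06-01T06:37:49.162266Z info access connection complete src.addr=10.10.2.20:48392 src.workload="productpage-v1-54bb874995-xkq54" dst.addr=10.10.2.18:15008 dst.hbone_addr=10.10.2.18:9080 dst.workload="reviews-v2-556d6457d-4r8n8" direction="inbound" bytes_sent=602 bytes_recv=192 duration="31ms"'

printf '%s\n' "$line" | log_field src.workload
printf '%s\n' "$line" | log_field dst.hbone_addr
printf '%s\n' "$line" | log_field direction
```

Against a live cluster, the same function could be fed from `kubectl -n istio-system logs -l app=ztunnel`, assuming the log format matches the lines shown here.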

# Check the ztunnel pods and store their names in variables

kubectl get pod -n istio-system -l app=ztunnel -owide

kubectl get pod -n istio-system -l app=ztunnel

ZPOD1NAME=$(kubectl get pod -n istio-system -l app=ztunnel -o jsonpath="{.items[0].metadata.name}")

ZPOD2NAME=$(kubectl get pod -n istio-system -l app=ztunnel -o jsonpath="{.items[1].metadata.name}")

ZPOD3NAME=$(kubectl get pod -n istio-system -l app=ztunnel -o jsonpath="{.items[2].metadata.name}")

echo $ZPOD1NAME $ZPOD2NAME $ZPOD3NAME

#

kubectl pexec $ZPOD1NAME -it -T -n istio-system -- bash

-------------------------------------------------------

iptables -t mangle -S

iptables -t nat -S

ss -tnlp

ss -tnp

ss -xnp

ls -l /var/run/ztunnel

# Check the metrics

curl -s http://localhost:15020/metrics | grep '^[^#]'

...

# Viewing Istiod state for ztunnel xDS resources

curl -s http://localhost:15000/config_dump

exit

-------------------------------------------------------

# Exclude only the netshoot pod from ambient mode

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

kubectl label pod netshoot istio.io/dataplane-mode=none

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

NAMESPACE POD NAME ADDRESS NODE WAYPOINT PROTOCOL

default netshoot 10.10.0.7 myk8s-control-plane None TCP

Detailed analysis

Check the istio-proxy information

#

docker exec -it myk8s-control-plane istioctl proxy-status

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/bookinfo-gateway-istio

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/bookinfo-gateway-istio --waypoint

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/bookinfo-gateway-istio --port 80

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/bookinfo-gateway-istio --port 80 -o json

docker exec -it myk8s-control-plane istioctl proxy-config route deploy/bookinfo-gateway-istio

docker exec -it myk8s-control-plane istioctl proxy-config route deploy/bookinfo-gateway-istio --name http.80

docker exec -it myk8s-control-plane istioctl proxy-config route deploy/bookinfo-gateway-istio --name http.80 -o json

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/bookinfo-gateway-istio

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/bookinfo-gateway-istio --fqdn productpage.default.svc.cluster.local -o json

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/bookinfo-gateway-istio --status healthy

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/bookinfo-gateway-istio --cluster 'outbound|9080||productpage.default.svc.cluster.local' -o json

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/bookinfo-gateway-istio

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/bookinfo-gateway-istio -o json

docker exec -it myk8s-control-plane istioctl proxy-config bootstrap deploy/bookinfo-gateway-istio

ztunnel-config

# A group of commands used to update or retrieve Ztunnel configuration from a Ztunnel instance.

docker exec -it myk8s-control-plane istioctl ztunnel-config

all Retrieves all configuration for the specified Ztunnel pod.

certificate Retrieves certificate for the specified Ztunnel pod.

connections Retrieves connections for the specified Ztunnel pod.

log Retrieves logging levels of the Ztunnel instance in the specified pod.

policy Retrieves policies for the specified Ztunnel pod.

service Retrieves services for the specified Ztunnel pod.

workload Retrieves workload configuration for the specified Ztunnel pod.

...

docker exec -it myk8s-control-plane istioctl ztunnel-config service

NAMESPACE SERVICE NAME SERVICE VIP WAYPOINT ENDPOINTS

default bookinfo-gateway-istio 10.200.1.122 None 1/1

default details 10.200.1.202 None 1/1

default kubernetes 10.200.1.1 None 1/1

default productpage 10.200.1.207 None 1/1

default ratings 10.200.1.129 None 1/1

default reviews 10.200.1.251 None 3/3

...

docker exec -it myk8s-control-plane istioctl ztunnel-config service --service-namespace default --node myk8s-worker

docker exec -it myk8s-control-plane istioctl ztunnel-config service --service-namespace default --node myk8s-worker2

docker exec -it myk8s-control-plane istioctl ztunnel-config service --service-namespace default --node myk8s-worker2 -o json

...

{

"name": "productpage",

"namespace": "default",

"hostname": "productpage.default.svc.cluster.local",

"vips": [

"/10.200.1.207"

],

"ports": {

"9080": 9080

},

"endpoints": {

"Kubernetes//Pod/default/productpage-v1-54bb874995-xkq54": {

"workloadUid": "Kubernetes//Pod/default/productpage-v1-54bb874995-xkq54",

"service": "",

"port": {

"9080": 9080

}

}

},

"ipFamilies": "IPv4"

},

...

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

docker exec -it myk8s-control-plane istioctl ztunnel-config workload --workload-namespace default

docker exec -it myk8s-control-plane istioctl ztunnel-config workload --workload-namespace default --node myk8s-worker2

docker exec -it myk8s-control-plane istioctl ztunnel-config workload --workload-namespace default --node myk8s-worker -o json

...

{

"uid": "Kubernetes//Pod/default/productpage-v1-54bb874995-xkq54",

"workloadIps": [

"10.10.2.20"

],

"protocol": "HBONE",

"name": "productpage-v1-54bb874995-xkq54",

"namespace": "default",

"serviceAccount": "bookinfo-productpage",

"workloadName": "productpage-v1",

"workloadType": "pod",

"canonicalName": "productpage",

"canonicalRevision": "v1",

"clusterId": "Kubernetes",

"trustDomain": "cluster.local",

"locality": {},

"node": "myk8s-worker",

"status": "Healthy",

"hostname": "",

"capacity": 1,

"applicationTunnel": {

"protocol": ""

}

},

...

docker exec -it myk8s-control-plane istioctl ztunnel-config certificate --node myk8s-worker

CERTIFICATE NAME TYPE STATUS VALID CERT SERIAL NUMBER NOT AFTER NOT BEFORE

spiffe://cluster.local/ns/default/sa/bookinfo-details Leaf Available true 6399f0ac1d1f508088c731791930a03a 2025-06-02T06:21:46Z 2025-06-01T06:19:46Z

spiffe://cluster.local/ns/default/sa/bookinfo-details Root Available true 8a432683a288fb4b61d5775dcf47019d 2035-05-30T04:53:58Z 2025-06-01T04:53:58Z

spiffe://cluster.local/ns/default/sa/bookinfo-productpage Leaf Available true f727d33914e23029b3c889c67efe12e1 2025-06-02T06:21:46Z 2025-06-01T06:19:46Z

spiffe://cluster.local/ns/default/sa/bookinfo-productpage Root Available true 8a432683a288fb4b61d5775dcf47019d 2035-05-30T04:53:58Z 2025-06-01T04:53:58Z

spiffe://cluster.local/ns/default/sa/bookinfo-ratings Leaf Available true d1af8176f5047f620d7795a4775869ad 2025-06-02T06:21:46Z 2025-06-01T06:19:46Z

spiffe://cluster.local/ns/default/sa/bookinfo-ratings Root Available true 8a432683a288fb4b61d5775dcf47019d 2035-05-30T04:53:58Z 2025-06-01T04:53:58Z

spiffe://cluster.local/ns/default/sa/bookinfo-reviews Leaf Available true af013ce3f7dca5dc1bead36455155d65 2025-06-02T06:21:46Z 2025-06-01T06:19:46Z

spiffe://cluster.local/ns/default/sa/bookinfo-reviews Root Available true 8a432683a288fb4b61d5775dcf47019d 2035-05-30T04:53:58Z 2025-06-01T04:53:58Z

docker exec -it myk8s-control-plane istioctl ztunnel-config certificate --node myk8s-worker -o json

...

docker exec -it myk8s-control-plane istioctl ztunnel-config connections --node myk8s-worker

WORKLOAD DIRECTION LOCAL REMOTE REMOTE TARGET PROTOCOL

productpage-v1-54bb874995-xkq54.default Inbound productpage-v1-54bb874995-xkq54.default:9080 bookinfo-gateway-istio-6cbd9bcd49-fwqqp.default:33052 HBONE

ratings-v1-5dc79b6bcd-64kcm.default Inbound ratings-v1-5dc79b6bcd-64kcm.default:9080 reviews-v2-556d6457d-4r8n8.default:56440 ratings.default.svc.cluster.local HBONE

reviews-v2-556d6457d-4r8n8.default Outbound reviews-v2-556d6457d-4r8n8.default:41722 ratings-v1-5dc79b6bcd-64kcm.default:15008 ratings.default.svc.cluster.local:9080 HBONE

docker exec -it myk8s-control-plane istioctl ztunnel-config connections --node myk8s-worker --raw

WORKLOAD DIRECTION LOCAL REMOTE REMOTE TARGET PROTOCOL

productpage-v1-54bb874995-xkq54.default Inbound 10.10.2.20:9080 10.10.1.6:33064 HBONE

productpage-v1-54bb874995-xkq54.default Inbound 10.10.2.20:9080 10.10.1.6:33052 HBONE

ratings-v1-5dc79b6bcd-64kcm.default Inbound 10.10.2.15:9080 10.10.2.18:56440 ratings.default.svc.cluster.local HBONE

ratings-v1-5dc79b6bcd-64kcm.default Inbound 10.10.2.15:9080 10.10.2.19:56306 ratings.default.svc.cluster.local HBONE

reviews-v2-556d6457d-4r8n8.default Outbound 10.10.2.18:45530 10.10.2.15:15008 10.200.1.129:9080 HBONE

reviews-v3-564544b4d6-nmf92.default Outbound 10.10.2.19:56168 10.10.2.15:15008 10.200.1.129:9080 HBONE
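When a node carries many connections, a per-direction count is often easier to read than the full table. The following sketch assumes the table layout shown above, where the second whitespace-separated column is DIRECTION; `count_directions` is a hypothetical helper, and the sample rows are copied from the `--raw` output.

```shell
# Count ztunnel connections per direction from a connections table on stdin.
# Assumes the first line is the header and column 2 is the DIRECTION column.
count_directions() {
  awk 'NR > 1 { n[$2]++ }
       END { printf "Inbound %d\nOutbound %d\n", n["Inbound"], n["Outbound"] }'
}

# Sample rows copied from the --raw output above.
count_directions <<'EOF'
WORKLOAD DIRECTION LOCAL REMOTE REMOTE TARGET PROTOCOL
productpage-v1-54bb874995-xkq54.default Inbound 10.10.2.20:9080 10.10.1.6:33064 HBONE
productpage-v1-54bb874995-xkq54.default Inbound 10.10.2.20:9080 10.10.1.6:33052 HBONE
ratings-v1-5dc79b6bcd-64kcm.default Inbound 10.10.2.15:9080 10.10.2.18:56440 ratings.default.svc.cluster.local HBONE
ratings-v1-5dc79b6bcd-64kcm.default Inbound 10.10.2.15:9080 10.10.2.19:56306 ratings.default.svc.cluster.local HBONE
reviews-v2-556d6457d-4r8n8.default Outbound 10.10.2.18:45530 10.10.2.15:15008 10.200.1.129:9080 HBONE
reviews-v3-564544b4d6-nmf92.default Outbound 10.10.2.19:56168 10.10.2.15:15008 10.200.1.129:9080 HBONE
EOF
```

For these six sample rows the function reports four inbound and two outbound connections.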

docker exec -it myk8s-control-plane istioctl ztunnel-config connections --node myk8s-worker -o json

...

{

"state": "Up",

"connections": {

"inbound": [

{

"src": "10.10.2.18:56440",

"originalDst": "ratings.default.svc.cluster.local",

"actualDst": "10.10.2.15:9080",

"protocol": "HBONE"

},

{

"src": "10.10.2.19:56306",

"originalDst": "ratings.default.svc.cluster.local",

"actualDst": "10.10.2.15:9080",

"protocol": "HBONE"

}

],

"outbound": []

},

"info": {

"name": "ratings-v1-5dc79b6bcd-64kcm",

"namespace": "default",

"trustDomain": "",

"serviceAccount": "bookinfo-ratings"

}

},

...

#

docker exec -it myk8s-control-plane istioctl ztunnel-config policy

NAMESPACE POLICY NAME ACTION SCOPE

#

docker exec -it myk8s-control-plane istioctl ztunnel-config log

ztunnel-25hpt.istio-system:

current log level is hickory_server::server::server_future=off,info

...

Diagram

Role of Specific Ports

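- 15008 (HBONE socket): transparently handles HTTP-based traffic using the HBONE protocol.

- 15006 (plaintext socket): handles unencrypted traffic inside the mesh for pod-to-pod communication.

- 15001 (outbound socket): controls outbound traffic and enforces policy on access to external services.

- The 0x539 mark identifies traffic that originates from the Istio proxy (for example, ztunnel).

- This mark distinguishes packets the proxy has already handled, so they are not reprocessed or misrouted.

- The 0x111 mark is used for connection-level marking within the Istio mesh and indicates that the connection has been handled by the proxy.

- The iptables CONNMARK module extends this mark to the whole connection, which speeds up matching of subsequent packets.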

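The end result of the new ambient capture model is that all traffic capture and redirection happens inside the pod's network namespace.

To the node, the CNI, and everything else, it looks as if there is a sidecar proxy inside the pod,

even though no sidecar proxy is running in the pod at all.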
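The mark values above are always compared under a mask (for example `0x539/0xfff`). As a rough illustration of that comparison, the sketch below ANDs the packet mark with the mask and compares the result with the value. `mark_matches` is a hypothetical helper written for this note; it is not part of Istio or iptables.

```shell
# Sketch of how "-m mark --mark VALUE/MASK" decides a match:
# the packet mark is ANDed with MASK and compared against VALUE.
# mark_matches is a hypothetical helper, not an Istio or iptables command.
# Args: packet mark, value, mask
mark_matches() {
  [ $(( $1 & $3 )) -eq $(( $2 )) ]
}

# A packet marked 0x539 matches 0x539/0xfff, so the redirect rules skip it.
if mark_matches 0x539 0x539 0xfff; then echo "0x539: skip redirect"; fi

# Bits above the mask are ignored, so 0x1539 also matches 0x539/0xfff.
if mark_matches 0x1539 0x539 0xfff; then echo "0x1539: skip redirect"; fi

# An unmarked packet (mark 0) does not match and would be redirected.
if ! mark_matches 0x0 0x539 0xfff; then echo "0x0: redirect"; fi
```

Only the low 12 bits take part in the comparison, which is why the rules can coexist with other components that use higher mark bits.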

Check productpage

#

PPOD=$(kubectl get pod -l app=productpage -o jsonpath='{.items[0].metadata.name}')

kubectl pexec $PPOD -it -T -- bash

-------------------------------------------------------

iptables-save

...

# Set up connection tracking for DNS traffic; redirection is later controlled based on marks

iptables -t raw -S

...

-A PREROUTING -j ISTIO_PRERT

-A OUTPUT -j ISTIO_OUTPUT

-A ISTIO_OUTPUT -p udp -m mark --mark 0x539/0xfff -m udp --dport 53 -j CT --zone 1

-A ISTIO_PRERT -p udp -m mark ! --mark 0x539/0xfff -m udp --sport 53 -j CT --zone 1

# Identify traffic carrying specific marks so that NAT can skip it later

iptables -t mangle -S

...

## Send incoming packets to the ISTIO_PRERT chain

-A PREROUTING -j ISTIO_PRERT

## Handle locally generated traffic the same way

-A OUTPUT -j ISTIO_OUTPUT

## For connections with connmark 0x111, restore the mark saved in connection tracking onto the packet

-A ISTIO_OUTPUT -m connmark --mark 0x111/0xfff -j CONNMARK --restore-mark --nfmask 0xffffffff --ctmask 0xffffffff

## If the packet mark is 0x539, set connmark 0x111 on the connection

-A ISTIO_PRERT -m mark --mark 0x539/0xfff -j CONNMARK --set-xmark 0x111/0xfff

# Perform the traffic redirection

iptables -t nat -S

...

## Exception for a specific IP (169.254.7.127, the link-local address Istio uses to identify kubelet health probes)

-A ISTIO_OUTPUT -d 169.254.7.127/32 -p tcp -m tcp -j ACCEPT

## Redirect DNS requests (udp/53) to port 15053

-A ISTIO_OUTPUT ! -o lo -p udp -m mark ! --mark 0x539/0xfff -m udp --dport 53 -j REDIRECT --to-ports 15053

## Redirect TCP-based DNS requests to 15053 as well

-A ISTIO_OUTPUT ! -d 127.0.0.1/32 -p tcp -m tcp --dport 53 -m mark ! --mark 0x539/0xfff -j REDIRECT --to-ports 15053

## Exclude TCP traffic marked 0x111 from redirection

-A ISTIO_OUTPUT -p tcp -m mark --mark 0x111/0xfff -j ACCEPT

## Skip traffic leaving via the loopback interface (destination other than 127.0.0.1)

-A ISTIO_OUTPUT ! -d 127.0.0.1/32 -o lo -j ACCEPT

## Redirect the remaining TCP traffic to 15001

-A ISTIO_OUTPUT ! -d 127.0.0.1/32 -p tcp -m mark ! --mark 0x539/0xfff -j REDIRECT --to-ports 15001

## Exclude traffic coming from 169.254.7.127 from redirection

-A ISTIO_PRERT -s 169.254.7.127/32 -p tcp -m tcp -j ACCEPT

## Redirect incoming external TCP traffic (except port 15008) to the inbound port (15006)

-A ISTIO_PRERT ! -d 127.0.0.1/32 -p tcp -m tcp ! --dport 15008 -m mark ! --mark 0x539/0xfff -j REDIRECT --to-ports 15006

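# Summary

## DNS traffic → redirected to port 15053 (ztunnel proxies DNS)

## TCP traffic → redirected to 15001 (outbound) or 15006 (inbound), depending on direction

## Traffic with specific marks (0x539, 0x111) or addresses is excluded from redirection

## conntrack and the mark system track traffic state and control the redirection conditions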

# Check 15001, 15006, 15008

ss -tnp

ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 127.0.0.1:15053 0.0.0.0:*

LISTEN 0 128 [::1]:15053 [::]:*

LISTEN 0 128 *:15001 *:*

LISTEN 0 128 *:15006 *:*

LISTEN 0 128 *:15008 *:*

LISTEN 0 2048 *:9080 *:* users:(("gunicorn",pid=18,fd=5),("gunicorn",pid=17,fd=5),("gunicorn",pid=16,fd=5),("gunicorn",pid=15,fd=5),("gunicorn",pid=14,fd=5),("gunicorn",pid=13,fd=5),("gunicorn",pid=12,fd=5),("gunicorn",pid=11,fd=5),("gunicorn",pid=1,fd=5))

# Verify encryption

tcpdump -i eth0 -A -s 0 -nn 'tcp port 15008'

apk update && apk add ngrep

ngrep -tW byline -d eth0 '' 'tcp port 15008'

#

ngrep -tW byline -d eth0 '' 'tcp port 15001'

ngrep -tW byline -d eth0 '' 'tcp port 15006'

#

ls -l /var/run/ztunnel

exit

-------------------------------------------------------
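To make the ordering of the nat `ISTIO_OUTPUT` rules easier to follow, here is a simplified model of the decision for a single outbound packet. It is only a sketch: `nat_output_action` is a hypothetical helper, it ignores the interface matches (`-o lo`) and treats 127.0.0.1 as the only loopback destination, and the example IP 10.200.1.10 for a DNS service is an assumption.

```shell
# Simplified model of the nat ISTIO_OUTPUT chain listed in the session above.
# nat_output_action is a hypothetical helper, not an Istio tool.
# Args: protocol (tcp|udp), destination IP, destination port, packet mark
nat_output_action() (
  proto=$1 dst=$2 dport=$3
  mark=$(( $4 & 0xfff ))        # the rules compare the mark under mask 0xfff
  ztunnel_mark=$(( 0x539 ))     # traffic originated by ztunnel
  conn_mark=$(( 0x111 ))        # connection already handled by the proxy

  if [ "$proto" = tcp ] && [ "$dst" = 169.254.7.127 ]; then
    echo "ACCEPT"
  elif [ "$dport" -eq 53 ] && [ "$dst" != 127.0.0.1 ] && [ "$mark" -ne "$ztunnel_mark" ]; then
    echo "REDIRECT 15053"
  elif [ "$proto" = tcp ] && [ "$mark" -eq "$conn_mark" ]; then
    echo "ACCEPT"
  elif [ "$proto" = tcp ] && [ "$dst" != 127.0.0.1 ] && [ "$mark" -ne "$ztunnel_mark" ]; then
    echo "REDIRECT 15001"
  else
    echo "NO-MATCH"             # falls through to the chain's default handling
  fi
)

nat_output_action tcp 10.200.1.129 9080 0x0    # app call to a Service IP
nat_output_action udp 10.200.1.10 53 0x0       # DNS query (hypothetical DNS IP)
nat_output_action tcp 10.10.2.15 15008 0x539   # HBONE traffic sent by ztunnel
```

The third call shows why the 0x539 mark matters: without it, ztunnel's own HBONE connection would be redirected back to port 15001 and loop.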

Verify mutual TLS is enabled - Docs

Validate mTLS using workload’s ztunnel configurations

# Confirm that PROTOCOL is HBONE

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

NAMESPACE POD NAME ADDRESS NODE WAYPOINT PROTOCOL

default bookinfo-gateway-istio-6cbd9bcd49-6cphf 10.10.2.6 myk8s-worker2 None TCP

default details-v1-766844796b-jd7n5 10.10.1.11 myk8s-worker None HBONE

...

Validate mTLS from metrics

istio_tcp_connections_opened_total{

app="ztunnel",

connection_security_policy="mutual_tls",

destination_principal="spiffe://cluster.local/ns/default/sa/bookinfo-details",

destination_service="details.default.svc.cluster.local",

reporter="source",

request_protocol="tcp",

response_flags="-",

source_app="curl",

source_principal="spiffe://cluster.local/ns/default/sa/curl",

source_workload_namespace="default",

...}

Validate mTLS from logs

To confirm that mTLS is enabled, check the source or destination ztunnel logs together with the peer identities.

#

kubectl -n istio-system logs -l app=ztunnel | grep -E "inbound|outbound"

2024-08-21T15:32:05.754291Z info access connection complete src.addr=10.42.0.9:33772 src.workload="curl-7656cf8794-6lsm4" src.namespace="default"

src.identity="spiffe://cluster.local/ns/default/sa/curl" dst.addr=10.42.0.5:15008 dst.hbone_addr=10.42.0.5:9080 dst.service="details.default.svc.cluster.local"

dst.workload="details-v1-857849f66-ft8wx" dst.namespace="default" dst.identity="spiffe://cluster.local/ns/default/sa/bookinfo-details"

direction="outbound" bytes_sent=84 bytes_recv=358 duration="15ms"

...
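When scanning many such log lines, it can help to pull out only the identity fields. The following is a minimal sketch that parses the sample line above (joined into one line); `log_field` is a hypothetical helper, and the check at the end is just one possible heuristic: both peers carry a SPIFFE identity and the destination address uses the HBONE port 15008.

```shell
# Sample access log line from the output above, joined into a single line.
log_line='2024-08-21T15:32:05.754291Z info access connection complete src.addr=10.42.0.9:33772 src.workload="curl-7656cf8794-6lsm4" src.namespace="default" src.identity="spiffe://cluster.local/ns/default/sa/curl" dst.addr=10.42.0.5:15008 dst.hbone_addr=10.42.0.5:9080 dst.service="details.default.svc.cluster.local" dst.workload="details-v1-857849f66-ft8wx" dst.namespace="default" dst.identity="spiffe://cluster.local/ns/default/sa/bookinfo-details" direction="outbound" bytes_sent=84 bytes_recv=358 duration="15ms"'

# log_field (hypothetical helper): print the value of key=value or key="value"
# for the field named in $1, reading one log line from stdin.
log_field() {
  sed -n "s/.*[[:space:]]$1=\"\{0,1\}\([^\" ]*\)\"\{0,1\}.*/\1/p"
}

src=$(printf '%s\n' "$log_line" | log_field src.identity)
dst=$(printf '%s\n' "$log_line" | log_field dst.identity)
dst_addr=$(printf '%s\n' "$log_line" | log_field dst.addr)

echo "source identity:      $src"
echo "destination identity: $dst"

# Heuristic: SPIFFE identities on both sides plus the HBONE port.
case "$src|$dst|$dst_addr" in
  spiffe://*"|"spiffe://*"|"*:15008) echo "mTLS over HBONE" ;;
  *) echo "not confirmed" ;;
esac
```

In a live cluster the same helper could be fed from the `kubectl -n istio-system logs -l app=ztunnel` command shown above instead of the embedded sample.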

Validate with Kiali dashboard

Validate with tcpdump

- If you have access to your Kubernetes worker nodes, you can run the tcpdump command to capture all traffic on the network interface, optionally focusing on the application ports and the HBONE port.

- In this example, port 9080 is the details service port and 15008 is the HBONE port:

# tcpdump -nAi eth0 port 9080 or port 15008

#

DPOD=$(kubectl get pods -l app=details -o jsonpath="{.items[0].metadata.name}")

echo $DPOD

#

kubectl pexec $DPOD -it -T -- sh -c 'tcpdump -nAi eth0 port 9080 or port 15008'

...

Secure Application Access : L4 Authorization Policy - Docs

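- The Layer 4 (L4) features of Istio's security policies are supported by ztunnel and are available in ambient mode. Kubernetes Network Policies also continue to work if your cluster has a CNI plugin that supports them, and can be used to provide defense in depth.

- The layering of ztunnel and waypoint proxies lets you choose whether to enable Layer 7 (L7) processing for a given workload. To use L7 policies and Istio's traffic routing features, you can deploy a waypoint for your workloads. Because policy can now be enforced in two places, there are some considerations to understand.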

# Re-enroll only the netshoot pod in ambient mode

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

kubectl label pod netshoot istio.io/dataplane-mode=ambient --overwrite

docker exec -it myk8s-control-plane istioctl ztunnel-config workload

# Create a new L4 Authorization Policy

# Explicitly allow only the netshoot service account to call the productpage service (the gateway service account is added in the later update):

kubectl apply -f - <<EOF

apiVersion: security.istio.io/v1

kind: AuthorizationPolicy

metadata:

name: productpage-viewer

namespace: default

spec:

selector:

matchLabels:

app: productpage

action: ALLOW

rules:

- from:

- source:

principals:

- cluster.local/ns/default/sa/netshoot

EOF

# Verify that the L4 Authorization Policy was created

kubectl get authorizationpolicy

NAME AGE

productpage-viewer 8s

# Monitor the ztunnel pod logs

kubectl logs ds/ztunnel -n istio-system -f | grep -E RBAC

# Verify the L4 Authorization Policy behavior

## Confirm blocked: requests through the gateway are denied because only netshoot is allowed

GWLB=$(kubectl get svc bookinfo-gateway-istio -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

while true; do docker exec -it mypc curl $GWLB/productpage | grep -i title ; date "+%Y-%m-%d %H:%M:%S"; sleep 1; done

## Confirm allowed: requests from netshoot succeed

kubectl exec -it netshoot -- curl -sS productpage:9080/productpage | grep -i title

while true; do kubectl exec -it netshoot -- curl -sS productpage:9080/productpage | grep -i title ; date "+%Y-%m-%d %H:%M:%S"; sleep 1; done

# Update the L4 Authorization Policy

kubectl apply -f - <<EOF

apiVersion: security.istio.io/v1

kind: AuthorizationPolicy

metadata:

name: productpage-viewer

namespace: default

spec:

selector:

matchLabels:

app: productpage

action: ALLOW

rules:

- from:

- source:

principals:

- cluster.local/ns/default/sa/netshoot

- cluster.local/ns/default/sa/bookinfo-gateway-istio

EOF

kubectl logs ds/ztunnel -n istio-system -f | grep -E RBAC

# Confirm allowed: requests through the gateway now succeed

GWLB=$(kubectl get svc bookinfo-gateway-istio -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

while true; do docker exec -it mypc curl $GWLB/productpage | grep -i title ; date "+%Y-%m-%d %H:%M:%S"; sleep 1; done

Allowed policy attributes : This list of attributes determines whether a policy is considered L4-only

| Type | Attribute | Positive match | Negative match |
|---|---|---|---|
| Source | Peer identity | principals | notPrincipals |
| Source | Namespace | namespaces | notNamespaces |
| Source | IP block | ipBlocks | notIpBlocks |
| Operation | Destination port | ports | notPorts |
| Condition | Source IP | source.ip | n/a |
| Condition | Source namespace | source.namespace | n/a |
| Condition | Source identity | source.principal | n/a |
| Condition | Remote IP | destination.ip | n/a |
| Condition | Remote port | destination.port | n/a |
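As an illustration of a policy that stays within the attributes in this table, the sketch below prints an AuthorizationPolicy that matches on source namespace and destination port only. The policy name `productpage-l4-example` and the helper `l4_policy_manifest` are hypothetical; the `namespaces` and `ports` fields are the ones listed in the table.

```shell
# Print an L4-only AuthorizationPolicy manifest (sketch).
# l4_policy_manifest and the policy name are hypothetical examples.
l4_policy_manifest() {
  cat <<'EOF'
apiVersion: security.istio.io/v1
kind: AuthorizationPolicy
metadata:
  name: productpage-l4-example
  namespace: default
spec:
  selector:
    matchLabels:
      app: productpage
  action: ALLOW
  rules:
  - from:
    - source:
        namespaces: ["default"]
    to:
    - operation:
        ports: ["9080"]
EOF
}

# Review the manifest first; to try it, pipe it into: kubectl apply -f -
l4_policy_manifest
```

Adding an attribute that is not in the table, such as `methods`, would turn this into an L7 policy that needs a waypoint.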

Configure waypoint proxies - Docs

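- A waypoint proxy is an optional deployment of the Envoy-based proxy that adds Layer 7 (L7) processing to a defined set of workloads.

- Waypoint proxies are installed, upgraded, and scaled independently of applications, and application owners should not need to be aware of their existence. Compared to the sidecar data plane mode, which runs an Envoy proxy instance alongside each workload, the number of proxies needed can be reduced substantially.

- A waypoint, or a set of waypoints, can be shared between applications with a similar security boundary. This might be all the instances of a particular workload, or all the workloads in a namespace.

- Unlike sidecar mode, in ambient mode policies are enforced by the destination waypoint. In many ways, the waypoint acts as a gateway to a resource (a namespace, service, or pod). Istio enforces that all traffic coming into the resource goes through the waypoint, which then enforces all policies for that resource.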

Do you need a waypoint proxy?

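- The layered approach of ambient lets users adopt Istio more incrementally, moving smoothly from no mesh, to the secure L4 overlay, to full L7 processing.

- Most of the features of ambient mode are provided by the ztunnel node proxy. ztunnel is scoped to process traffic only at Layer 4 (L4), so that it can safely operate as a shared component.

- When you configure redirection to a waypoint, traffic is forwarded by ztunnel to that waypoint. If your applications require any of the following L7 mesh functions, you need to use a waypoint proxy: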

- Traffic management: HTTP routing & load balancing, circuit breaking, rate limiting, fault injection, retries, timeouts

- Security: Rich authorization policies based on L7 primitives such as request type or HTTP header

- Observability: HTTP metrics, access logging, tracing

Deploy a waypoint proxy

# istioctl can generate a Kubernetes Gateway resource for a waypoint proxy.

# For example, to generate a waypoint proxy named waypoint for the default namespace that can process traffic for services in the namespace:

kubectl describe pod bookinfo-gateway-istio-6cbd9bcd49-6cphf | grep 'Service Account'

Service Account: bookinfo-gateway-istio

# Generate a waypoint configuration as YAML

docker exec -it myk8s-control-plane istioctl waypoint generate -h

# --for string Specify the traffic type [all none service workload] for the waypoint

istioctl waypoint generate --for service -n default

apiVersion: gateway.networking.k8s.io/v1

kind: Gateway

metadata:

labels:

istio.io/waypoint-for: service

name: waypoint

namespace: default

spec:

gatewayClassName: istio-waypoint

listeners:

- name: mesh

port: 15008

protocol: HBONE

#

docker exec -it myk8s-control-plane istioctl waypoint apply -n default

✅ waypoint default/waypoint applied

kubectl get gateway

kubectl get gateway waypoint -o yaml

...

#

kubectl get pod -l service.istio.io/canonical-name=waypoint -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

waypoint-66b59898-p7v5x 1/1 Running 0 2m15s 10.10.1.21 myk8s-worker <none> <none>

#

docker exec -it myk8s-control-plane istioctl waypoint list

NAME REVISION PROGRAMMED

waypoint default True

docker exec -it myk8s-control-plane istioctl waypoint status

NAMESPACE NAME STATUS TYPE REASON MESSAGE

default waypoint True Programmed Programmed Resource programmed, assigned to service(s) waypoint.default.svc.cluster.local:15008

docker exec -it myk8s-control-plane istioctl proxy-status

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

bookinfo-gateway-istio-6cbd9bcd49-6cphf.default Kubernetes SYNCED (5m5s) SYNCED (5m5s) SYNCED (5m4s) SYNCED (5m5s) IGNORED istiod-86b6b7ff7-gmtdw 1.26.0

waypoint-66b59898-p7v5x.default Kubernetes SYNCED (5m4s) SYNCED (5m4s) IGNORED IGNORED IGNORED istiod-86b6b7ff7-gmtdw 1.26.0

ztunnel-52d22.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-gmtdw 1.26.0

ztunnel-ltckp.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-gmtdw 1.26.0

ztunnel-mg4mn.istio-system Kubernetes IGNORED IGNORED IGNORED IGNORED IGNORED istiod-86b6b7ff7-gmtdw 1.26.0

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/waypoint

RESOURCE NAME TYPE STATUS VALID CERT SERIAL NUMBER NOT AFTER NOT BEFORE

default Cert Chain ACTIVE true 4346296f183e559a876c0410cb154f50 2025-06-02T10:11:45Z 2025-06-01T10:09:45Z

ROOTCA CA ACTIVE true aa779aa04b241aedaa8579c0bc5c3b5f 2035-05-30T09:19:54Z 2025-06-01T09:19:54Z

#

kubectl pexec waypoint-66b59898-dlrj4 -it -T -- bash

----------------------------------------------

ip -c a

curl -s http://localhost:15020/stats/prometheus

ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 127.0.0.1:15000 0.0.0.0:* users:(("envoy",pid=18,fd=18))

LISTEN 0 4096 0.0.0.0:15008 0.0.0.0:* users:(("envoy",pid=18,fd=35))

LISTEN 0 4096 0.0.0.0:15008 0.0.0.0:* users:(("envoy",pid=18,fd=34))

LISTEN 0 4096 0.0.0.0:15021 0.0.0.0:* users:(("envoy",pid=18,fd=23))

LISTEN 0 4096 0.0.0.0:15021 0.0.0.0:* users:(("envoy",pid=18,fd=22))

LISTEN 0 4096 0.0.0.0:15090 0.0.0.0:* users:(("envoy",pid=18,fd=21))

LISTEN 0 4096 0.0.0.0:15090 0.0.0.0:* users:(("envoy",pid=18,fd=20))

LISTEN 0 4096 *:15020 *:* users:(("pilot-agent",pid=1,fd=11))

ss -tnp

ss -xnlp

ss -xnp

exit

----------------------------------------------

Istio Ambient Mesh : Kiali's support for Ambient Mesh

Secure Application Access : L7 Authorization Policy - Docs ⇒ still incomplete

Using the Kubernetes Gateway API, you can deploy a waypoint proxy for the productpage service that uses the bookinfo-productpage service account. Any traffic going to the service will be mediated, enforced and observed by the Layer 7 (L7) proxy.

# Deploy a waypoint proxy for the productpage service:

istioctl x waypoint apply --service-account bookinfo-productpage

# Check the gateway.networking.k8s.io resources

# View the productpage waypoint proxy status;

## you should see the details of the gateway resource with Programmed status:

kubectl get gtw

NAME CLASS ADDRESS PROGRAMMED AGE

bookinfo-productpage istio-waypoint 10.100.176.97 True 18m

kubectl get gtw bookinfo-productpage -o yaml | kubectl neat

apiVersion: gateway.networking.k8s.io/v1

kind: Gateway

metadata:

annotations:

gateway.istio.io/controller-version: "5"

istio.io/for-service-account: bookinfo-productpage

name: bookinfo-productpage

namespace: default

spec:

gatewayClassName: istio-waypoint

listeners:

- allowedRoutes:

namespaces:

from: Same

name: mesh

port: 15008

protocol: HBONE

# Check the waypoint proxy pod for the productpage service

kubectl get pod -l service.istio.io/canonical-name=bookinfo-productpage-istio-waypoint

NAME READY STATUS RESTARTS AGE

bookinfo-productpage-istio-waypoint-858c5b9664-rp7jl 1/1 Running 0 79s

# Inspect the files inside the waypoint proxy pod

kubectl exec -it deploy/bookinfo-productpage-istio-waypoint -- ls /etc/istio/proxy/

XDS envoy-rev.json grpc-bootstrap.json

kubectl exec -it deploy/bookinfo-productpage-istio-waypoint -- cat /etc/istio/proxy/grpc-bootstrap.json | jq

kubectl exec -it deploy/bookinfo-productpage-istio-waypoint -- cat /etc/istio/proxy/envoy-rev.json | jq

# Update the existing productpage-viewer AuthorizationPolicy to an L7 policy (allow GET)

# Update our AuthorizationPolicy to explicitly allow the sleep and gateway service accounts to GET the productpage service, but perform no other operations:

kubectl apply -f - <<EOF

apiVersion: security.istio.io/v1beta1

kind: AuthorizationPolicy

metadata:

name: productpage-viewer

namespace: default

spec:

targetRef:

kind: Gateway

group: gateway.networking.k8s.io

name: bookinfo-productpage

action: ALLOW

rules:

- from:

- source:

principals:

- cluster.local/ns/default/sa/sleep

- cluster.local/$GATEWAY_SERVICE_ACCOUNT

to:

- operation:

methods: ["GET"]

EOF

# Check the AuthorizationPolicy

kubectl get AuthorizationPolicy

NAME AGE

productpage-viewer 25m

# Inspect the details with proxy-config

istioctl proxy-config listener deploy/bookinfo-productpage-istio-waypoint --waypoint

LISTENER CHAIN MATCH DESTINATION

envoy://connect_originate ALL Cluster: connect_originate

envoy://main_internal inbound-vip|9080||productpage.default.svc.cluster.local-http ip=10.100.248.201 -> port=9080 Cluster: inbound-vip|9080|http|productpage.default.svc.cluster.local

envoy://main_internal direct-tcp ip=192.168.1.221 -> ANY Cluster: encap

envoy://main_internal direct-http ip=192.168.1.221 -> application-protocol='h2c' Cluster: encap

envoy://main_internal direct-http ip=192.168.1.221 -> application-protocol='http/1.1' Cluster: encap

envoy://connect_terminate default ALL Inline Route:

envoy:// ALL Inline Route: /healthz/ready*

envoy:// ALL Inline Route: /stats/prometheus*

istioctl proxy-config clusters deploy/bookinfo-productpage-istio-waypoint

SERVICE FQDN PORT SUBSET DIRECTION TYPE DESTINATION RULE

agent - - - STATIC

connect_originate - - - ORIGINAL_DST

encap - - - STATIC

main_internal - - - STATIC

productpage.default.svc.cluster.local 9080 http inbound-vip EDS

prometheus_stats - - - STATIC

sds-grpc - - - STATIC

xds-grpc - - - STATIC

zipkin - - - STRICT_DNS

istioctl proxy-config routes deploy/bookinfo-productpage-istio-waypoint

NAME VHOST NAME DOMAINS MATCH VIRTUAL SERVICE

inbound-vip|9080|http|productpage.default.svc.cluster.local inbound|http|9080 * /*

...

istioctl proxy-config routes deploy/bookinfo-productpage-istio-waypoint --name "inbound-vip|9080|http|productpage.default.svc.cluster.local" -o yaml

- name: inbound-vip|9080|http|productpage.default.svc.cluster.local

validateClusters: false

virtualHosts:

- domains:

- '*'

name: inbound|http|9080

routes:

- decorator:

operation: :9080/*

match:

prefix: /

name: default

route:

cluster: inbound-vip|9080|http|productpage.default.svc.cluster.local

maxStreamDuration:

grpcTimeoutHeaderMax: 0s

maxStreamDuration: 0s

timeout: 0s

istioctl proxy-config endpoints deploy/bookinfo-productpage-istio-waypoint

ENDPOINT STATUS OUTLIER CHECK CLUSTER

127.0.0.1:15000 HEALTHY OK prometheus_stats

127.0.0.1:15020 HEALTHY OK agent

envoy://connect_originate/ HEALTHY OK encap

envoy://connect_originate/192.168.1.221:9080 HEALTHY OK inbound-vip|9080|http|productpage.default.svc.cluster.local

envoy://main_internal/ HEALTHY OK main_internal

unix://./etc/istio/proxy/XDS HEALTHY OK xds-grpc

unix://./var/run/secrets/workload-spiffe-uds/socket HEALTHY OK sds-grpc

# Verify that the L7 policy is enforced

# Note that a deny at L7 also provides a better user experience (an explicit error message and a 403 response code).

# this should fail with an RBAC error because it is not a GET operation

kubectl exec deploy/sleep -- curl -s "http://$GATEWAY_HOST/productpage" -X DELETE

RBAC: access denied

# this should fail with an RBAC error because the identity is not allowed

kubectl exec deploy/notsleep -- curl -s http://productpage:9080/

RBAC: access denied

# this should continue to work

kubectl exec deploy/sleep -- curl -s http://productpage:9080/ | grep -o "<title>.*</title>"

<title>Simple Bookstore App</title>

L7 observability

# With waypoint proxy deployed for the web-api service, you automatically get L7 metrics for this service.

# For example, you can view the 403 response code from the web-api service’s waypoint proxy’s /stats/prometheus endpoint:

kubectl exec deploy/bookinfo-productpage-istio-waypoint -- curl -s http://localhost:15020/stats/prometheus

kubectl exec deploy/bookinfo-productpage-istio-waypoint -- curl -s http://localhost:15020/stats/prometheus | grep istio_requests_total

# You should get something similar to this:

# TYPE istio_requests_total counter

istio_requests_total{reporter="destination",source_workload="sleep",source_canonical_service="sleep",source_canonical_revision="latest",source_workload_namespace="test",source_principal="spiffe://cluster.local/ns/test/sa/sleep",source_app="unknown",source_version="unknown",source_cluster="Kubernetes",destination_workload="unknown",destination_workload_namespace="test",destination_principal="spiffe://cluster.local/ns/test/sa/web-api-istio-waypoint",destination_app="unknown",destination_version="unknown",destination_service="web-api.test.svc.cluster.local",destination_canonical_service="unknown",destination_canonical_revision="unknown",destination_service_name="web-api",destination_service_namespace="test",destination_cluster="Kubernetes",request_protocol="http",response_code="403",grpc_response_status="",response_flags="-",connection_security_policy="mutual_tls"} 2

istio_requests_total{reporter="destination",source_workload="sleep",source_canonical_service="sleep",source_canonical_revision="latest",source_workload_namespace="test",source_principal="spiffe://cluster.local/ns/test/sa/sleep",source_app="unknown",source_version="unknown",source_cluster="Kubernetes",destination_workload="web-api",destination_workload_namespace="test",destination_principal="spiffe://cluster.local/ns/test/sa/web-api-istio-waypoint",destination_app="unknown",destination_version="unknown",destination_service="web-api.test.svc.cluster.local",destination_canonical_service="web-api",destination_canonical_revision="v1",destination_service_name="web-api",destination_service_namespace="test",destination_cluster="Kubernetes",request_protocol="http",response_code="200",grpc_response_status="",response_flags="-",connection_security_policy="mutual_tls"} 1
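To see at a glance how many requests were allowed versus denied, the counter can be summed per `response_code`. The sketch below does this with awk; `requests_by_code` is a hypothetical helper, and the embedded sample is a shortened version of the output above (most labels dropped for readability).

```shell
# Shortened sample of the istio_requests_total output (labels trimmed).
metrics='# TYPE istio_requests_total counter
istio_requests_total{reporter="destination",destination_service="web-api.test.svc.cluster.local",response_code="403",connection_security_policy="mutual_tls"} 2
istio_requests_total{reporter="destination",destination_service="web-api.test.svc.cluster.local",response_code="200",connection_security_policy="mutual_tls"} 1'

# requests_by_code (hypothetical helper): sum the counter per response_code.
# Reads Prometheus text format on stdin and prints "<code> <total>" lines.
requests_by_code() {
  awk 'index($0, "istio_requests_total{") == 1 {
         if (match($0, /response_code="[0-9]+"/)) {
           # skip the 15 characters of response_code=" and the closing quote
           code = substr($0, RSTART + 15, RLENGTH - 16)
           total[code] += $NF
         }
       }
       END { for (c in total) print c, total[c] }' | sort
}

printf '%s\n' "$metrics" | requests_by_code
```

Against a live waypoint, the input could instead come from the `curl -s http://localhost:15020/stats/prometheus` command shown above.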

Control Traffic - Link ⇒ still incomplete

- Control traffic through the waypoint proxy (weight adjustment)

- Deploy a waypoint proxy for the reviews service, using the bookinfo-reviews service account, so that any traffic going to the reviews service will be mediated by the waypoint proxy.

# Deploy a waypoint proxy for the bookinfo-reviews service

istioctl x waypoint apply --service-account bookinfo-reviews

# Check the gateway.networking.k8s.io resources

kubectl get gtw

NAME CLASS ADDRESS PROGRAMMED AGE

bookinfo-productpage istio-waypoint 10.100.176.97 True 24m

bookinfo-reviews istio-waypoint 10.100.21.124 True 45s

# Check the waypoint proxy pod for the bookinfo-reviews service

kubectl get pod -l service.istio.io/canonical-name=bookinfo-reviews-istio-waypoint

NAME READY STATUS RESTARTS AGE

bookinfo-reviews-istio-waypoint-5d544b6d54-hm6g4 1/1 Running 0 9m3s

# From sleep, check how requests are distributed across the bookinfo-reviews versions

kubectl exec deploy/sleep -- sh -c "for i in \$(seq 1 100); do curl -s http://$GATEWAY_HOST/productpage | grep reviews-v.-; done"

# Apply the per-version weight policy

# Configure traffic routing to send 90% of requests to reviews v1 and 10% to reviews v2:

kubectl apply -f istio-*/samples/bookinfo/networking/virtual-service-reviews-90-10.yaml

kubectl apply -f istio-*/samples/bookinfo/networking/destination-rule-reviews.yaml

# From sleep, check the distribution across the bookinfo-reviews versions after applying the weight policy

# Confirm that roughly 10% of the traffic from 100 requests goes to reviews-v2:

kubectl exec deploy/sleep -- sh -c "for i in \$(seq 1 100); do curl -s http://$GATEWAY_HOST/productpage | grep reviews-v.-; done"

kubectl exec deploy/sleep -- sh -c "for i in \$(seq 1 100); do curl -s http://$GATEWAY_HOST/productpage | grep reviews-v.-; done | sort | uniq -c | sort -nr"

94 <u>reviews-v1-86896b7648-sxspj</u>

6 <u>reviews-v2-b7dcd98fb-87h5b</u>
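To compare the observed split with the configured 90/10 weights, the per-pod counts can be turned into percentages per version. The sketch below is one way to do that; `version_share` and `repeat_line` are hypothetical helpers, and the sample input simply reproduces the 94/6 result shown above.

```shell
# version_share (hypothetical helper): read matched lines such as
# "<u>reviews-v1-86896b7648-sxspj</u>" on stdin and print
# "<version> <count> <percent>%" per reviews version.
version_share() {
  sed -n 's/.*\(reviews-v[0-9][0-9]*\)-.*/\1/p' |
    awk '{ count[$1]++; total++ }
         END { for (v in count)
                 printf "%s %d %.0f%%\n", v, count[v], 100 * count[v] / total }' |
    sort
}

# repeat_line (hypothetical helper): print a line N times, to rebuild the sample.
repeat_line() {
  i=0
  while [ "$i" -lt "$2" ]; do
    printf '%s\n' "$1"
    i=$(( i + 1 ))
  done
}

# Rebuild the 94/6 result from the run above and summarize it.
{
  repeat_line '<u>reviews-v1-86896b7648-sxspj</u>' 94
  repeat_line '<u>reviews-v2-b7dcd98fb-87h5b</u>' 6
} | version_share
```

With only 100 requests some deviation from 10% is expected, so a 94/6 result is consistent with the 90/10 configuration.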

Extend waypoints with WebAssembly plugins - Docs
